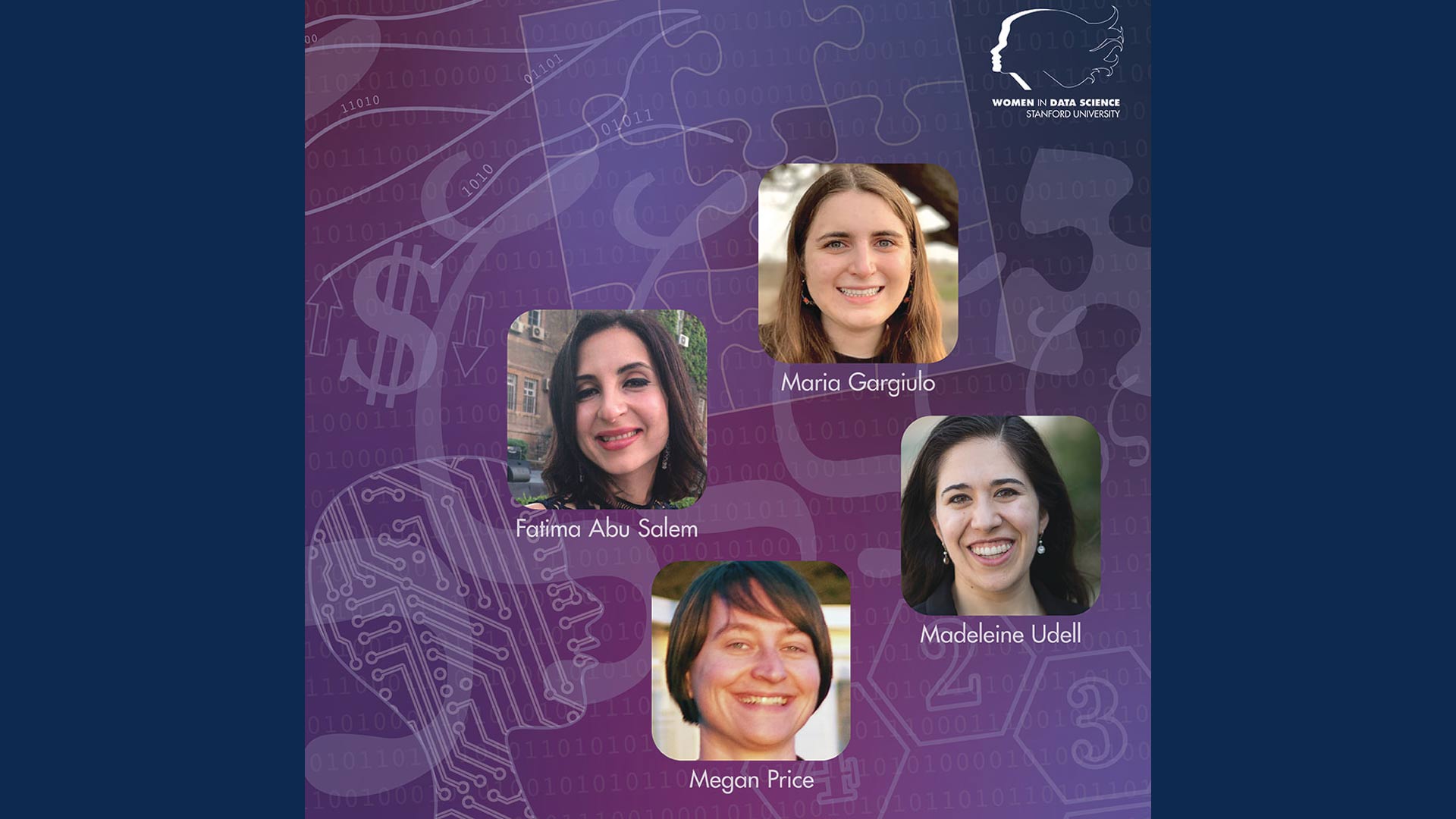

Madeleine Udell, Assistant Professor, Cornell University, @madeleineudell sits down with Lisa Martin at Stanford University for WiDS 2019.

#WiDS2019 #CornellUniversity #theCUBE

https://siliconangle.com/2019/03/08/t…

This professor is cleaning up tech’s ‘messy data’ problem

Strong data sets are table stakes for any organization today. Data insights can provide the tentpoles for building a strategic roadmap and offer unexpected learnings for businesses to leverage as new market opportunities. But even the most valuable data set can prove worthless if its insights are entangled in the unstructured digital void.

An estimated 80 percent of all data is unstructured, which renders the intel buried in its complex documents and media files inaccessible without an alternative method of analysis. As information floods the tech industry faster than new talent is prepared to make sense of it, the unstructured data challenge is posing a formidable hurdle for businesses in the digital age.

Madeleine Udell (pictured), assistant professor of operations research and information engineering at Cornell University, is educating a new era of technologists to decode this so-called “messy data” with a more effective approach to tech collaboration.

“Oftentimes people only learn about big, messy data when they go to industry,” Udell said. “I’m interested in understanding low dimensional structure in large, messy data sets [to] figure out ways of … making them seem cleaner, smaller and easier to work with.”

Udell spoke with Lisa Martin, host of theCUBE, SiliconANGLE Media’s mobile livestreaming studio, during the recent Stanford Women in Data Science event at Stanford University.

This week, theCUBE spotlights Madeleine Udell in its Women in Tech feature.

The unstructured data challenge

The rise of messy data can be attributed in large part to the influx of information from a growing number of digital endpoints. Internet of things devices deliver a stream of “messy” data, but the clutter can also come from images, videos, social media, emails, and other data sets not already formatted for simple analysis.

Though more complex and tedious to decipher, these data sources are some of the most highly valued in a market focused on individual user targeting. That gap between ability and potential innovation is what drives Udell’s interest in unstructured data, an area of technology the assistant professor says people entering the tech industry are not adequately prepared for. In her own classes, Udell teaches optimization for machine learning from a messy data perspective.

“[The class] introduces undergraduates to what messy data sets look like, which they often don’t see in their undergraduate curriculum, and ways to wrangle them into forms they could use with other tools they have learned as undergraduates,” she said.

Udell’s interest in messy data was piqued when she met the challenge head-on while working on the Obama 2012 presidential campaign. She was tasked with analyzing voter information but found the unstructured data sets too cumbersome to yield valuable insight.

“They had hundreds of millions of rows, one for every voter in the United States, and tens of thousands of columns about things that we knew about those voters,” Udell said. “Gender … education level, approximate income, whether or not they had voted in the last elections, and much of the data was missing. How do you even visualize this kind of data set?”

When Udell returned to work on her Ph.D., she was intent on discovering a more efficient method for parsing out value from unstructured data sets. “I wanted to figure out the right way of approaching this, because a lot of people will just sort of hack it,” she said. “I wanted to understand what’s really going on.”

Making an impact with communication

Udell is as interested in the technical architectures that enable data analysis as she is in supporting organizations through the implementation processes that will allow them to benefit from her work. A comprehensive answer to data management requires both math and communication, and Udell says her broad skill set is part of what has enabled her to make sense of messy data.

“If you want your technical work to have an impact, you need to be able to communicate it to other people,” Udell stated.

The social aspect of her role is crucial to finding solutions that actually address user problems and work within existing processes. “You need to make … sure you’re working on the right problems, which means talking with people to figure out what the right problems are,” she said. “This is … fundamental to my career, talking to people about problems they’re facing that they don’t know how to solve.”

…

Here’s the complete video interview, part of SiliconANGLE’s and theCUBE’s coverage of the Stanford Women in Data Science event: